Introduction

For many years, Oracle Fusion Middleware based on WebLogic Server has been able to provide high availability, fault tolerance and disaster recovery. This is documented in the Maximum Availability Architecture (MAA) whitepapers. Follow this link for all the MAA documentation or follow this link to go directly to the Fusion Middleware Disaster Recovery architecture documentation. Exalogic/Exadata provides an ideal platform on which these architectures can be realised, with all the advantages that come with using Oracle Engineered Systems.

This blog posting gives a very high-level overview of the principles used in implementing active/passive DR for a Fusion Middleware application. Much of the activity involved from an application perspective is identical whether the deployment is on physical or virtual hardware. In this article we take a slightly deeper dive into how the Exalogic ZFS storage appliance is used to enable the DR solution.

Basic principles involved in setting up FMW DR

The basic tenet of deploying an application is to follow a set of rules during the deployment/configuration of the application which will make it simple to start the application up on the DR site. The setup should be:

- Deploy all tiers of the application, ensuring that:

  - In the primary environment a set of hostname aliases is used for all configuration. These aliases are not linked to a specific host, and all configuration in the products specifies these names rather than actual IP addresses.

  - The binary files and application configuration (normally the domain homes) are all located as shares on the ZFS appliance and mounted via NFS to the Exalogic vServers.

  - Critical application data that must be persisted goes into the database, specifically the WebLogic transaction logs and the JMS messages. (We will use the Oracle Data Guard product to ensure critical data is synchronously copied to the remote site.)

  - The configuration in the operating system is kept to the absolute minimum: probably no more than /etc/hosts entries and, if needed, specific service startup commands. Other OS configuration should be built into the templates used to create the environment in the first place.

- Create mirror vServers on the DR site.

  - These vServers will be used to host the production environment when DR has occurred. The same minimal OS configuration should be present on this site. To save time in DR the servers can be kept running, or they can be started on demand at DR startup. If they are already running, ensure that the application services are all shut down. The hosts files must contain the same hostname aliases as the primary site, but they will resolve to different IP addresses.

- Create a replication agreement for all the shares that host the application binaries and domains.

- When DR is to happen (ignoring the DB):

  - Break the replication agreement.

  - Export the replicated shares so that they can be mounted.

  - Mount the replicated shares in exactly the same location on the DR vServers.

  - Start up the application on the DR environment.

  - Test and, if OK, redirect traffic at the front end into the DR service.
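The hostname-alias rule in the list above can be illustrated with a small sketch. The alias names and IP addresses here are invented purely for illustration. Only the idea, identical aliases on both sites resolving to site-specific addresses, comes from the text.

```python
# Hypothetical alias-to-IP maps; the names and addresses are illustrative only.
PRIMARY = {"fmw-admin": "10.1.0.10", "fmw-wls1": "10.1.0.11", "fmw-wls2": "10.1.0.12"}
DR = {"fmw-admin": "10.2.0.10", "fmw-wls1": "10.2.0.11", "fmw-wls2": "10.2.0.12"}


def hosts_lines(alias_to_ip):
    """Render /etc/hosts style lines ('IP<TAB>alias'), sorted by alias."""
    return [f"{ip}\t{alias}" for alias, ip in sorted(alias_to_ip.items())]


def alias_mismatch(primary, dr):
    """Return the aliases that are defined on one site but not the other."""
    return sorted(set(primary) ^ set(dr))


if __name__ == "__main__":
    missing = alias_mismatch(PRIMARY, DR)
    if missing:
        raise SystemExit(f"Aliases not defined on both sites: {missing}")
    # The DR hosts file carries the same aliases with DR-site addresses.
    print("\n".join(hosts_lines(DR)))
```

A check like `alias_mismatch` could be run whenever an alias is added, so that the DR hosts files never drift from the primary ones.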

All these steps can be automated and put into the control of Enterprise Manager such that the element of human error can be removed from the equation during a disaster recovery activity.
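As a rough illustration of what such automation might look like, the sketch below runs the DR steps listed above in order and stops at the first failure. It is not Enterprise Manager code and uses no Oracle API. The `placeholder` actions are hypothetical stand-ins that only print what a real step would do.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]


def run_runbook(steps: List[Step]) -> List[str]:
    """Run steps in order, stopping at the first one that reports failure.

    Returns the names of the steps that completed successfully.
    """
    completed = []
    for name, action in steps:
        if not action():
            break
        completed.append(name)
    return completed


def placeholder(name: str) -> Callable[[], bool]:
    """Hypothetical stand-in for a real action; it only prints the step name."""
    def action() -> bool:
        print(f"[dry run] {name}")
        return True
    return action


DR_STEPS = [
    "Break the replication agreement",
    "Export the replicated shares",
    "Mount the replicated shares on the DR vServers",
    "Start the application on the DR environment",
    "Test, then redirect front-end traffic to the DR service",
]

if __name__ == "__main__":
    done = run_runbook([(step, placeholder(step)) for step in DR_STEPS])
    print(f"{len(done)} of {len(DR_STEPS)} steps completed")
```

Stopping at the first failed step keeps traffic from being redirected to a DR environment whose shares or services did not come up.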

Using the ZFS Storage Appliance for Replication

From the application perspective a key function lies with the NAS storage which has to be able to copy an application from one site to another. The ZFS Storage appliance within an Exalogic is a fantastic product that provides exactly this functionality. It is simple to set it up to copy the shares between sites.

Setup a Replication Network between sites

The first activity required when performing DR between two sites is to create a replication network between the ZFS appliances in the two Exalogic racks. This can be done using the existing 1GbE management network; however, this is not recommended because that network is not fault tolerant, there being only one 1GbE switch in the rack. On the ZFS appliance, however, there are two 1/10GbE network connections available on the back of each storage head (NET2 & NET3). By default one connection goes into the 1GbE switch and the other is a dangling cable, thus two independent routes into the data centre are available. If a longer cable is required to connect then it is possible to disconnect the existing ones and put in new cables. (Recommendation: get Oracle Field Engineers to do this. It is a tight squeeze getting into the ports and the engineers are experts at doing this!)

Once each head is connected via multiple routes to the data centre, and hence on to the remote Exalogic rack, you can use link aggregation to combine the ports on each head and then assign an IP address which can float from head to head, so that it is always on the active head and hence has access to the data in the disk array.

Replicating the shares

Having set up the network such that the two storage appliances can access each other, we now go through the process of enabling replication. This is a simple case of setting up the replication service and then configuring replication on each project/share that you want copied over. Initially, set up the remote target where data will be copied to. This is done via the BUI, selecting Configuration and then Remote Replication. Click on the + symbol beside "Targets" to add the details (IP address and root password) of the remote ZFS appliance.

| Adding a replication target |

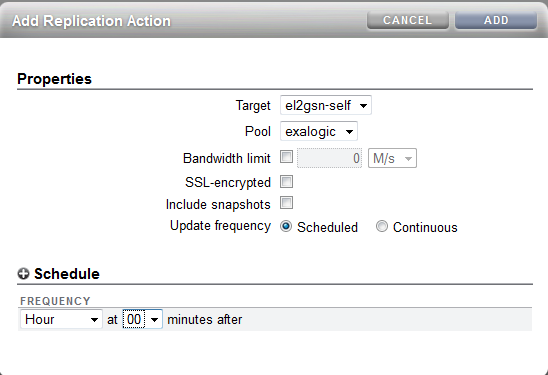

To set up replication using the BUI, click on Shares and either pick a share or click on Projects and edit at the project level. There is then a replication sub-tab where you can click on the "+" symbol to add a new "Action" to replicate.


| Replication of a project |

Obviously the latter two options have an impact on the quantity of data copied, and performance is worse if all data has to travel encrypted. However, after the initial replication only changed blocks are copied across, and given that the shares are used primarily for binaries and configuration data there will not be a huge quantity flowing between the sites.
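To get a feel for how little time an incremental update might need, a back-of-the-envelope estimate can help. The sketch below is only arithmetic. The changed-data size, the link speed and the `efficiency` factor (the assumed fraction of nominal bandwidth actually achieved) are illustrative assumptions, not measurements from an Exalogic.

```python
def transfer_seconds(changed_bytes: int, link_mbit_per_s: float,
                     efficiency: float = 0.7) -> float:
    """Estimate the seconds needed to ship changed blocks over a link.

    efficiency is an assumed fraction of the nominal bandwidth that is
    actually achieved (protocol overhead, encryption, contention).
    """
    if link_mbit_per_s <= 0:
        raise ValueError("link_mbit_per_s must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    usable_bytes_per_s = link_mbit_per_s * 1_000_000 / 8 * efficiency
    return changed_bytes / usable_bytes_per_s


if __name__ == "__main__":
    # Illustrative figures: 500 MB of changed blocks over a 1 Gbit/s link.
    print(f"{transfer_seconds(500_000_000, 1000):.1f} s")  # prints "5.7 s"
```

Lowering `efficiency` is a crude way to model the cost of sending all data encrypted.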

Process to mount the replica copies

Having completed the previous steps we have the binaries and configuration all held at the primary site and a copy on the remote site. (Although bear in mind that the remote copy may be slightly out of date! It is NOT synchronous replication.) For DR we now assume that the primary site has been hit by a gas explosion or, slightly less dramatically, that we are shutting down the primary site for maintenance and so want to move all services to the DR environment.

The first thing to do is to stop the replication from the primary site. If the primary environment is still running then this is as simple as disabling the replication agreement. Obviously, if there is no access to the primary then one must assume that replication has stopped.

Then on the DR site we want to make the replicated shares available to the vServers. This is achieved by "exporting" the project/share. To navigate to the replica share, select Shares and then the Projects listing or Shares listing as appropriate. Under the "Projects" or "Filesystems : LUNs" title you can click to see the Local or Replica filesystems. By default the local ones are shown, so click on Replica to see the data copied from a remote ZFS appliance.


| Replicated Projects |

Under the General tab there is the option to "Export". Select this check box and hit Apply, and the share will be available for the clients to mount. By default the same mount point that was used on the primary site will be used on the DR site.

| Health Warning : When you export a project/share then all shares with the same directory mount point are re-mounted on the client systems. Make sure every project has a unique mount point. If left at the default of /export then the Exalogic Control shares are also re-mounted which has the impact of rebooting compute nodes. |


| Export checkbox to enable share to be mounted |
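The health warning above suggests checking that every project has its own mount point before exporting anything. A minimal sketch of such a check follows. The project names and paths are hypothetical, and in practice the mapping would have to be gathered from the appliance by whatever means you already use.

```python
from collections import defaultdict
from typing import Dict, List


def duplicate_mountpoints(projects: Dict[str, str]) -> Dict[str, List[str]]:
    """Map each mount point used by more than one project to those projects."""
    by_mount = defaultdict(list)
    for project, mountpoint in projects.items():
        # Treat "/export" and "/export/" as the same mount point.
        by_mount[mountpoint.rstrip("/") or "/"].append(project)
    return {m: sorted(names) for m, names in by_mount.items() if len(names) > 1}


if __name__ == "__main__":
    # Hypothetical project -> mount point mapping, for illustration only.
    projects = {
        "control_shares": "/export",
        "fmw_binaries": "/export/",
        "fmw_domains": "/export/fmw_domains",
    }
    for mountpoint, names in duplicate_mountpoints(projects).items():
        print(f"{mountpoint} is shared by: {', '.join(names)}")
```

An empty result means no two projects share a mount point, which is the condition the warning asks for.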

Once the shares have been exported, the DR vServers can mount the shares, start the application services and be ready to pick up from the primary site. Finally, create a replication agreement to push data from the DR site back to the primary until the failback to the primary site happens.
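Because the replica keeps the same mount point as the primary, the mount commands on the DR vServers differ from the primary ones only in the storage host they point at. The sketch below builds (but does not run) standard `mount -t nfs` commands. The host name, export paths, client mount points and the `rw,hard` options are assumptions chosen for illustration.

```python
import shlex
from typing import List


def nfs_mount_command(storage_host: str, export_path: str, mount_point: str,
                      options: str = "rw,hard") -> List[str]:
    """Build the argument list for mounting one NFS export on a client."""
    return ["mount", "-t", "nfs", "-o", options,
            f"{storage_host}:{export_path}", mount_point]


# Hypothetical export path -> client mount point pairs, identical on both sites.
SHARES = {
    "/export/fmw/binaries": "/u01/app/fmw",
    "/export/fmw/domains": "/u01/domains",
}

if __name__ == "__main__":
    # "dr-zfs-storage" is a made-up alias for the DR site's ZFS appliance.
    for export_path, mount_point in SHARES.items():
        args = nfs_mount_command("dr-zfs-storage", export_path, mount_point)
        print(" ".join(shlex.quote(arg) for arg in args))
```

Printing the commands rather than executing them makes it easy to review them before they are placed in a startup script.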

Once the environment has been correctly set up, all the steps for DR take on the order of seconds to complete, so the outage for a DR switchover can be brought down to seconds as far as the technical implementation is concerned.