Overview

This posting provides an overview of how Enterprise Manager 12c has been integrated with Exalogic. It then dives into the installation process, providing an overview of the activities that complements the documentation. (For integrating with Exalogic 2.0.6.0.n read this posting in conjunction with the details shown here.)

The versions used in this example are:-

- Virtualised Exalogic - 2.0.4.0.2

- Enterprise Manager (EM12c) - 12.1.0.2.0

Oracle Enterprise Manager is a powerful tool that can be used to manage a large enterprise compute facility, looking after both the hardware and the software running on it (apps to disk management). The normal operating model is a central Oracle Management Service (OMS), which consists of an application hosted in WebLogic Server with an underlying database to hold configuration and state information. It communicates with the services it manages via an agent that is deployed onto each operating system. Through plugins and extensions it has specific knowledge of each environment and hence can present an appropriate monitoring and management screen.

For Exalogic there are several plugins that enable it to create a powerful view onto the rack and monitor it from apps to disk. These plugins include:

- ZFS Storage - A specific plugin allows EM12c to communicate with the ZFS appliance within the Exalogic rack to monitor the status of the storage.

- Virtualisation - A plugin allows communication with the Oracle Virtual Machine Manager system used in Exalogic to provide details of how the virtual infrastructure is deployed and a view onto each virtual machine (vServer) created.

- Exalogic Elastic Cloud/Fusion Middleware - This plugin links in with the Exalogic Control infrastructure and gives information on the state of the physical environment. It also links into agents deployed onto the vServers and provides a central view on the middleware software that can be deployed onto Exalogic. (Built in understanding of WebLogic domains, applications deployed, Oracle Traffic Director installations and Coherence clusters.)

- Engineered Systems Healthchecks - A plugin that integrates with the exachk scripts to highlight any configuration inconsistencies.

The diagram below depicts a deployment topology for EM12c to monitor Exalogic. There are more complex options available to make EM12c highly available and to manage firewalls and proxying of communications. This blog posting only considers a basic installation for managing Exalogic.

Figure: OMS Deployment to monitor and manage an Exalogic rack

There are plenty of alternative network configurations and deployment options that could be considered. The key thing is that the OMS server should have a network path to both the Exalogic Control vServers (OVMM & EMOC) and to the client created vServers that will be running the applications.

For example, in a purely test setup we have in the lab we actually run the OMS and OMS repository in a vServer on the Exalogic rack and make use of the IPoIB-virt-admin network to give the OMS server suitable access to all the vServers on the rack. This is great for test and demonstration purposes but in a large enterprise it is likely that the Enterprise Manager configuration will sit externally to the Exalogic.

This posting assumes that you already have an instance of Enterprise Manager 12c operational in your environment.

Details on the installation process can be found in the documentation. This posting will continue to consider all the steps involved in configuring EM12c to monitor the Exalogic rack.

The installation documentation can be found here :-

EM12c Exalogic Configuration

As an overview the process is:-

- Get the correct versions of the software (plugins & EM12c) installed

- Deploy agents onto the OVMM & EMOC vServers in the Exalogic Control stack

- Deploy the ZFS Storage appliance plugin to monitor the storage

- Deploy the Exalogic Elastic Cloud plugin to get the Exalogic monitored.

- Deploy the Oracle Virtualization plugin to monitor the OVMM environment

- If deploying hosts onto the vServers, set up your vServers as needed

- Optional - Deploy the Engineered System Healthchecks

Prerequisites

The process of installing/configuring the various components to allow the Exalogic to be monitored in EM12c involves a number of prerequisite activities.

Ensuring you get the correct plugins

EM12c makes heavy use of plugins. Plugins are managed from the Extensibility menus. (Setup --> Extensibility --> "Self Update" or "Plug-ins")

If you have set up your EM12c instance in a network location that has access to the internet then you can automatically pick up the Oracle plugins from a well-known location. Simply click where it says "Online" or "Offline" beside the Connection Mode under the Status in the Self Update page. If you are not able to access the internet then use the Offline mode; the tab shows the location for em_catalog.zip. Download this, move it to the OMS server and then Browse/Upload the file or make use of the command line (# emcli import_update_catalog -file <path to zip> -omslocal)
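For the offline route, the command-line import can be wrapped in a small helper. This is only a sketch: it assumes an EM CLI session has already been established on the OMS host (emcli login), it uses the -file=<path> form of the argument, and the function name and the EMCLI override variable are illustrative rather than part of the product.

```shell
#!/bin/sh
# Sketch: import a downloaded Self Update catalog on the OMS host.
# Assumes "emcli login" has already been run. EMCLI is a hypothetical
# override so that a non-default emcli location can be used.
import_update_catalog() {
    catalog_zip=$1
    if [ ! -r "$catalog_zip" ]; then
        echo "catalog archive not readable: $catalog_zip" >&2
        return 1
    fi
    # -omslocal indicates that the file sits on the OMS host's own filesystem.
    "${EMCLI:-emcli}" import_update_catalog -file="$catalog_zip" -omslocal
}
```

It would be called as import_update_catalog /tmp/em_catalog.zip, where the path is wherever you copied the archive to.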

Once uploaded, on the "Plug-ins" page ensure that the following plugins are downloaded and "On Management Server"

- Oracle Virtualisation (12.1.0.3.0)

- Note - This is not the most recent version as there is an incompatibility between 12.1.0.4.0 and the OVMM instance that runs as part of Exalogic Control. If you have 12.1.0.4.0 already deployed then undeploy it from the OMS instance.

- Exalogic Elastic Cloud Infrastructure (12.1.0.1.0) - Not required for Virtual monitoring as the fusion middleware monitoring incorporates Exalogic. Necessary for monitoring of a physical Exalogic rack.

- Oracle Engineered System Healthchecks (12.1.0.3.0)

- Not necessary for the general system monitoring but allows visibility and control over running exachk, the health checking tool for Exalogic.

- Sun ZFS Storage Appliance (12.1.0.2.0)

Deploying Agents to EMOC & OVMM

For full integration with EM12c it is necessary to have agents deployed to both the OVMM and EMOC vServers. The agent binaries have already been deployed to the control vServers but as EM12c does all the deployment itself it is actually simpler to use the facilities of EM12c to deploy into a new directory. As such the following instructions will deploy the agents onto the rack:-

- Ensure you have an oracle user and known password on the vServers. (The oracle user is already present; as root, use passwd to change the password to a known value.)

- Create a directory to host the agent. eg.

# mkdir -p /opt/oracle/em12c/agent

- Make the directory for the agent owned by the oracle user. (Check the group ownership on each vServer, on the OVMM the oracle user is in the dba group while on EMOC it is in the oracle group.)

# chown -R oracle:oracle /opt/oracle

- If the vServers are not set up for DNS then ensure that the fully qualified hostname for the OMS server is included in the /etc/hosts file.

- Add the Exalogic info file to the template.

On the hypervisor (OVS) nodes of the Exalogic rack is an identifier file that specifies the rack identifier. The file is /var/exalogic/info/em-context.info. In the template create an equivalent directory structure and copy the em-context.info file into this directory.

- Make a symbolic link from the sshd file in /etc/pam.d to a file called emagent. (Allows actions to be performed on the vServer using credentials managed in LDAP. See MOS note How to Configure the Enterprise Management Agent Host Credentials for PAM and LDAP (Doc ID 422073.1) for more detail.)

# cd /etc/pam.d

# ln -s sshd emagent

- Make the necessary changes to the sudoers configuration file (/etc/sudoers)

- Change Defaults !visiblepw to Defaults visiblepw

- Change Defaults requiretty to Defaults !requiretty

- Add the sudo permissions for the oracle user as shown below

oracle ALL=(root) /usr/bin/id,/*/ADATMP_[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]_[0-9][0-9]-[0-9][0-9]-[0-9][0-9]-[AP]M/agentdeployroot.sh,/*/*/ADATMP_[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]_[0-9][0-9]-[0-9][0-9]-[0-9][0-9]-[AP]M/agentdeployroot.sh,/*/*/*/ADATMP_[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]_[0-9][0-9]-[0-9][0-9]-[0-9][0-9]-[AP]M/agentdeployroot.sh,/*/*/*/*/ADATMP_[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]_[0-9][0-9]-[0-9][0-9]-[0-9][0-9]-[AP]M/agentdeployroot.sh

- Now deploy the agent onto the vServer from the Enterprise Manager console.

Setup --> Add Target --> Add Targets Manually & select the Add Host... option and follow the wizard.
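Before starting the Add Host wizard it can be worth confirming that the prerequisites above are actually in place on each control vServer. The following is a minimal sketch of such a check, to be run as root. It assumes the vServer relies on /etc/hosts rather than DNS and that the emagent link was created exactly as shown above; the function name and the optional alternative-root argument are illustrative only.

```shell
#!/bin/sh
# Sketch: verify the agent prerequisites on a control vServer.
# $1 is the fully qualified OMS hostname (your value).
# $2 is a hypothetical alternative filesystem root, useful for dry runs
#    against a copy of the files; leave it empty to check the live system.
check_agent_prereqs() {
    oms_fqdn=$1
    root=${2:-}
    rc=0

    # Without DNS the OMS hostname has to appear in /etc/hosts.
    grep -qF -- "$oms_fqdn" "$root/etc/hosts" 2>/dev/null ||
        { echo "missing: $oms_fqdn in /etc/hosts"; rc=1; }

    # emagent must be a symbolic link pointing at the sshd PAM file.
    [ -L "$root/etc/pam.d/emagent" ] &&
        [ "$(readlink "$root/etc/pam.d/emagent")" = "sshd" ] ||
        { echo "missing: /etc/pam.d/emagent -> sshd"; rc=1; }

    # sudoers must enable visiblepw and must not require a tty.
    grep -Eq '^[[:space:]]*Defaults[[:space:]]+visiblepw' "$root/etc/sudoers" 2>/dev/null ||
        { echo "missing: Defaults visiblepw"; rc=1; }
    grep -Eq '^[[:space:]]*Defaults[[:space:]]+!requiretty' "$root/etc/sudoers" 2>/dev/null ||
        { echo "missing: Defaults !requiretty"; rc=1; }

    return $rc
}
```

A call such as check_agent_prereqs oms.example.com (the hostname here is a placeholder) prints one line per missing item and returns non-zero if anything needs attention. It does not check the oracle sudo entry or the agent directory ownership.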

Setup to monitor the ZFS appliance

The ZFS appliance is monitored via an agent deployed to a device that has network access to the appliance. On an Exalogic the recommendation is to use the EM12c agent deployed to the Exalogic Control EMOC vServer.

Before setting up the monitoring in EM12c we have to run through a "workflow" on the ZFS storage appliance itself that will set up a user with appropriate permissions to monitor the appliance. The agent hosting the ZFS Storage plugin will then communicate with the ZFS appliance as this user to gather details on the current operation.

To achieve this log onto the ZFS BUI as the root user and navigate to "Maintenance" --> "Workflows". Then run the workflow called "Configuring for Oracle Enterprise Manager" which will create the user and appropriate worksheet to allow the monitoring of the device.

Figure: Enabling the ZFS Storage for EM12c monitoring

This activity to create the user must be repeated on the second/standby storage head although it is not necessary to recreate the worksheet on the second head.

Once complete, on the Plug-ins management page (Setup --> Extensibility --> Plug-ins) deploy the ZFS Storage Appliance plugin to the OMS instance and then to the EMOC agent. You can then configure the ZFS target. This is done via the Setup menu.

- Setup --> Add Target --> Add Target Manually

- Select "Add Non-Host Targets by Specifying Target Monitoring Properties"

- Select the Target Type of Sun ZFS Storage Appliance & select the EMOC monitoring agent.

- In the wizard give the name you would like the appliance to appear as in the EM12c interface and supply the credentials and IP address for the device. (Use the IB storage network, not the public 1GbE network IP.)

Once this completes it is possible to select the target for the storage appliance and view details on the shares created and the current usage of the device.

Figure: Monitoring of ZFS Storage Appliance

Deploying the Exalogic Infrastructure Plugin

There are a couple of steps to getting the environment set up for the Exalogic infrastructure plugin to operate correctly.

- Sort out the certificates so that the agent can communicate with the Ops Center infrastructure of Exalogic Control

- Deploy/configure the plugin.

Managing the EMOC certificates

The first step is to ensure that the EM12c agent can communicate with the Ops Center instance, which is only available over a secure communications protocol. Because it uses a self-signed certificate it is necessary to include this certificate in the trust store of the agent.

- Export the certificate from the Ops Center keystore. This is the keystore in the OEM installation on the ec1-vm vServers (/etc/opt/sun/cacao2/instances/oem-ec/security/jsse). It is possible to use the JDK tools to extract the certificate.

# cd /etc/opt/sun/cacao2/instances/oem-ec/security/jsse

# /opt/oracle/em12c/agent/core/12.1.0.2.0/jdk/bin/keytool -export -alias cacao_agent -file oc.crt -keystore truststore -storepass trustpass

Note 1 - The default password for the EMOC truststore is "trustpass". Others have mentioned that the password was "welcome". If trustpass does not work try out welcome.

Note 2 - We explicitly use the keytool version that is shipped with the Oracle EM12c Agent (Java 1.6). The default version of java on the Exalogic Control vServer is java 1.4 and running the 1.4 version of keytool against the truststore will result in the following error:-

# keytool -list -keystore truststore

Enter key store password: trustpass

keytool error: gnu.javax.javax.crypto.keyring.MalformedKeyringException: incorrect magic

- Import the certificate you just exported into the agent's trust store. Ensure you import into the correct AgentTrust.jks file, specifically the one for the agent instance you are using and not (as the docs currently state) the copy in the agent binaries.

# cd /opt/oracle/em12c/agent/agent_inst/sysman/config/montrust

# /opt/oracle/em12c/agent/core/12.1.0.2.0/jdk/bin/keytool -import -keystore ./AgentTrust.jks -alias wlscertgencab -file /etc/opt/sun/cacao2/instances/oem-ec/security/jsse/oc.crt
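After the import it may be useful to confirm that the certificate really landed in the agent instance's trust store. The sketch below wraps keytool -list for that purpose. The function name is illustrative, and the store password is deliberately left as an argument because it depends on your agent installation.

```shell
#!/bin/sh
# Sketch: confirm the Ops Center certificate is present in the agent's
# trust store. Every argument is a value you supply.
verify_trusted_cert() {
    keytool_bin=$1                  # e.g. the keytool shipped with the EM12c agent
    trust_store=$2                  # the agent instance's AgentTrust.jks
    store_pass=$3                   # trust store password for your installation
    cert_alias=${4:-wlscertgencab}  # alias used in the import step above
    # keytool exits non-zero when the alias is not found in the store.
    "$keytool_bin" -list -keystore "$trust_store" \
        -storepass "$store_pass" -alias "$cert_alias"
}
```

For example, verify_trusted_cert /opt/oracle/em12c/agent/core/12.1.0.2.0/jdk/bin/keytool ./AgentTrust.jks <store password> run from the montrust directory should print the entry for the wlscertgencab alias.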

Deploying the Exa Infrastructure Plugin

There are a number of steps to getting the Exalogic Infrastructure plugin to monitor the rack.

- Deploy the Exalogic Elastic Cloud Infrastructure plugin to the OMS server. (Setup --> Extensibility --> Plug-ins, select the Exalogic Elastic Cloud Infrastructure and from the actions pick "Deploy on >" & "Management Servers" )

- Deploy the plugin to the Ops Center (EMOC) vServer. Once the plugin has been deployed successfully to the OMS instance, use the same options as above but select "Deploy on >" & "Management Agent..." and select the EMOC host agent.

- Now we want to run the Exalogic wizard to add the targets for the Exalogic rack itself. This is done via the Setup --> Add Target --> Add Targets Manually options. Then select "Add Non-Host Targets Using Guided Process (Also Adds Related Targets)", pick the Exalogic Elastic Cloud and click on "Add Using Guided Discovery" which will show the wizard as pictured below.

Figure: Discovery of Exalogic Elastic Cloud

This wizard appears to finish quickly and it is then possible to select the Exalogic from the Targets menu. However, the system will be initialising and synchronising in the background so it takes a few minutes to get the full rack discovered. Once present, the screenshots below show the monitoring of the hardware with a general picture for the rack and a couple of shots to show the InfiniBand network monitoring.

Figure: Exalogic Monitoring - Hardware view

Figure: Monitoring the InfiniBand Fabric

Figure: Monitoring an InfiniBand Switch

Deploying the OVMM Monitoring

The first thing to ensure is that the plugin version that is installed is the 12.1.0.3.0 version of Oracle Virtualization. The steps are similar to the steps for the Exalogic Infrastructure Plugin.

However, prior to doing the deployment to Enterprise Manager the OVMM server should be set up to be read-only for the EM12c monitoring agent to use. Follow these steps on the OVMM server to set up a user as read only.

Log in to the Oracle VM Manager vServer as the oracle user, and then perform the commands in the sequence below.

- cd /u01/app/oracle/ovm-manager-3/ovm_shell

- sh ovm_shell.sh --url=tcp://localhost:54321 --username=admin --password=<ovmm admin user password>

- ovm = OvmClient.getOvmManager ()

- f = ovm.getFoundryContext ()

- j = ovm.createJob ( 'Setting EXALOGIC_ID' );

The EXALOGIC_ID can be found in the em-context.info on dom0 located in the following file path location:

/var/exalogic/info/em-context.info

You must log in to dom0 as a root user to obtain this file. For example, if the em-context.info file content is ExalogicID=Oracle Exalogic X2-2 AK00018758, then the EXALOGIC_ID will be AK00018758.

- j.begin ();

- f.setAsset ( "EXALOGIC_ID", "<Exalogic ID for the Rack>");

- j.commit ();

- Ctrl/d
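The EXALOGIC_ID value used in the setAsset call above can be pulled out of em-context.info rather than copied by hand. The helper below is a sketch that assumes the file has the same layout as the example given above, with the identifier as the last whitespace-separated field of the ExalogicID line. The function name is illustrative.

```shell
#!/bin/sh
# Sketch: print the rack identifier from an em-context.info file.
# Assumes a line of the form "ExalogicID=Oracle Exalogic X2-2 AK00018758",
# where the identifier is the final field.
exalogic_id() {
    info_file=${1:-/var/exalogic/info/em-context.info}
    awk -F= '$1 == "ExalogicID" {
        n = split($2, parts, " ")   # split the value on whitespace
        print parts[n]              # last field is the rack identifier
        exit
    }' "$info_file"
}
```

Run as root on dom0, exalogic_id with no argument reads the default location. With the example content shown above it prints AK00018758.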

Now deploy the OVMM virtualisation plugins to the OMS server:-

- Deploy the Oracle Virtualization plugin to the OMS server

- Deploy the Oracle Virtualization plugin to the agent running on the OVMM server.

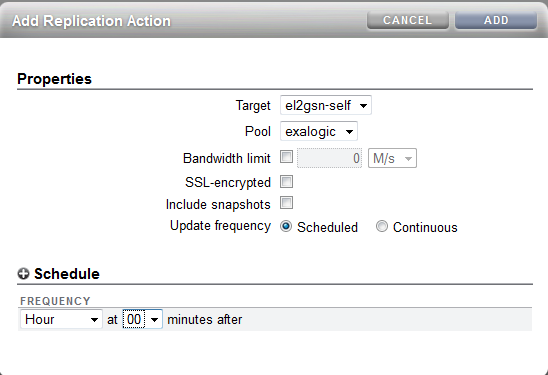

- Run the add target wizard for the Oracle VM Manager.

- Setup --> Add Target --> Add Target Manually

- Select "Add Non-Host Targets by Specifying Target Monitoring Properties" & choose the target type of "Oracle VM Manager" and the monitoring agent for the OVMM server host.

- Enter the details on the wizard page (example shown below)

- Submit the job, wait a few minutes to allow the discovery to progress and then you can view the Target under Systems or all targets.

Figure: Running the discovery wizard for the Exalogic Virtualised Infrastructure

Deploying the Engineered System Healthchecks

Both Exalogic and Exadata have a healthcheck script that can be run - exachk. On Exalogic the script can be downloaded from My Oracle Support and when run against an Exalogic rack it will check the configuration of the rack. Running exachk creates output files that detail any issues found with the rack. To integrate with Enterprise Manager it is necessary to change the behaviour of exachk so that it outputs files in an XML format that can be parsed by the EM12c plugin and presented to the OMS server in a format that it can understand and present on screen. To modify the behaviour simply set an environment variable prior to running the exachk script - export RAT_COPY_EM_XML_FILES=1. You can also use RAT_OUTPUT=<output directory> to direct the output to a specific location. (The default behaviour is to put the output into the same directory as the exachk script is run from.)
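The two environment variables can be set for a single exachk run with a small wrapper, which is convenient if the script is later scheduled to run regularly. This is a sketch only: the function name is illustrative, the location of the exachk script is whatever you chose when unpacking it, and any extra arguments are passed through to exachk unchanged.

```shell
#!/bin/sh
# Sketch: run exachk so that it also writes the XML files read by the
# EM12c healthcheck plugin.
# $1 = path to the exachk script, $2 = output directory, rest = exachk args.
run_exachk_for_em() {
    if [ $# -lt 2 ]; then
        echo "usage: run_exachk_for_em <exachk path> <output dir> [exachk args]" >&2
        return 2
    fi
    exachk_cmd=$1
    out_dir=$2
    shift 2
    mkdir -p "$out_dir" || return 1
    # The variables are set only in the environment of this one command.
    RAT_COPY_EM_XML_FILES=1 RAT_OUTPUT="$out_dir" "$exachk_cmd" "$@"
}
```

The output directory passed here is the same directory that would later be given to the healthcheck target wizard in EM12c.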

The recommendation for a virtual Exalogic is to run the exachk utility on the EMOC vServer.

To install the plugin simply ensure that the "Oracle Engineered System Healthchecks" plugin is downloaded and installed onto the OMS server and onto the agent deployed to the EMOC server. Then create the target as per the OVMM mechanism. The wizard for the healthcheck simply requests the directory on the server where the output will be read from and the frequency of checking for new versions of the exachk output. (Default is 31 days.) Then set up the EMOC server to run exachk on a regular basis. The output becomes available via the EM12c console and hence can be made available to specific users who may not actually have access to the rack itself.