Introduction

Right from the outset, Exalogic has depended heavily on the Infiniband network interconnect to link all of its components together. With the release of the virtualised Exalogic and its multi-tenancy features, the flexibility of the Infiniband fabric in providing secure networking has become critical. This posting attempts to explain some of the underlying operations of the Infiniband network and how it provides a secure networking capability.

Infiniband Partitions

When thinking about how we can keep virtual servers (vServers, which equate to the guest operating systems) isolated from each other, it is often said that Infiniband partitions are analogous to VLANs in the Ethernet world. The analogy is a good one, although the underpinnings of Infiniband (IB) are very different from Ethernet routing.

An IB fabric consists of a number of switches, which can be either spine switches or leaf switches: a spine switch connects switches together, and a leaf switch connects to hosts. On a standalone Exalogic, or a smaller Exalogic cabled together with an Exadata, all the compute nodes and storage heads/Exadata storage cells can be linked together via leaf switches only. Each physical component or host connected to the fabric contains a dual-ported Host Channel Adapter (HCA) card, which allows the host to be cabled to multiple switches. This gives two possible deployment architectures, as shown below:

[Figure: Simple topology - 1 level only]

[Figure: Spine switch topologies - 2 levels]

When we create an IB partition, we are telling the fabric which hosts can communicate with which other hosts over that particular partition. Partition membership follows a couple of very simple rules:

- A full member can communicate with all members of the partition

- A limited member can only communicate with a full member of the partition

|

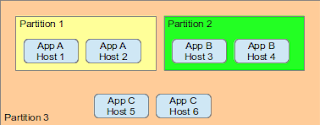

| A Simple Partitioning example |

In this case hosts 1 and 2 are both full members of partition 1, so App A can talk to both instances. Partition 2 has hosts 3 and 4 as full members for App B. Partition 3 has hosts 5 and 6 as full members and hosts 1-4 as limited members. In this way both App A and App B can access the services of App C, but because hosts 1-4 are only limited members of partition 3, App A has no way to reach App B over it.
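The two membership rules can be captured in a few lines. This is a minimal Python sketch of the simple example above; the host names and the `PARTITIONS` table are illustrative only and are not an Infiniband API.

```python
from enum import Enum


class Membership(Enum):
    FULL = "full"
    LIMITED = "limited"


# Illustrative partitions from the simple example: host -> membership type.
PARTITIONS = {
    1: {"host1": Membership.FULL, "host2": Membership.FULL},        # App A
    2: {"host3": Membership.FULL, "host4": Membership.FULL},        # App B
    3: {"host5": Membership.FULL, "host6": Membership.FULL,         # App C
        "host1": Membership.LIMITED, "host2": Membership.LIMITED,
        "host3": Membership.LIMITED, "host4": Membership.LIMITED},
}


def can_talk_on(partition, a, b):
    """True if hosts a and b may communicate over the given partition."""
    members = PARTITIONS[partition]
    if a not in members or b not in members:
        return False
    # A full member reaches everyone; two limited members never reach each other.
    return Membership.FULL in (members[a], members[b])


def can_talk(a, b):
    """True if at least one partition lets a and b communicate."""
    return any(can_talk_on(p, a, b) for p in PARTITIONS)


print(can_talk("host1", "host5"))  # True: App A reaches App C via partition 3
print(can_talk("host1", "host3"))  # False: App A cannot reach App B
```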

Virtualised Exalogic and Partitions

Now that we have an idea of what a partition does, let's dive into the details of a virtualised Exalogic and consider how we can create multiple vServers and maintain network isolation between them. We will do this with an example consisting of two applications, each deployed in a three-tier topology, although we will not concentrate much on the database tier in this posting. The first tier is a set of load-balancing Oracle Traffic Director instances, below that is an application tier of multiple WebLogic Server instances, and below that is a database tier. The diagram below shows the sort of Exalogic deployment we will consider, with the database tier omitted for simplicity.

[Figure: Application deployments on a virtualised Exalogic]

In this case the client population is split across different tagged VLANs: Application A uses VLAN 100 and Application B uses VLAN 101. Each VLAN is connected to a different Ethernet over Infiniband (EoIB) network on the Exalogic rack, and each of these networks is implemented as its own partition that includes the special Ethernet "bridge" ports on the Exalogic gateway switches. Internally, each set of vServers is also connected to a private network, which is implemented as another IB partition. If the vServers need access to the ZFS Storage Appliance held within the Exalogic rack, they are also connected to the storage network. The storage network is a special one created when the rack is installed: every connected vServer is a limited member and only the storage appliance is a full member, so vServers cannot connect to each other over this network.
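The example deployment can be expressed the same way, treating each network as a partition with typed members. This is a minimal Python sketch; the vServer names, the single `gw_bridge` member standing in for the gateway bridge ports, and full membership for vServers on the EoIB and private networks are all assumptions made for illustration.

```python
FULL, LIMITED = "full", "limited"

# Hypothetical membership for the example deployment (names are illustrative).
NETWORKS = {
    "eoib_vlan100": {"otd_a1": FULL, "otd_a2": FULL, "gw_bridge": FULL},
    "eoib_vlan101": {"otd_b1": FULL, "otd_b2": FULL, "gw_bridge": FULL},
    "private_a": {"otd_a1": FULL, "otd_a2": FULL, "wls_a1": FULL, "wls_a2": FULL},
    "private_b": {"otd_b1": FULL, "otd_b2": FULL, "wls_b1": FULL, "wls_b2": FULL},
    # Storage network: vServers are limited members, only the appliance is full.
    "storage": {"wls_a1": LIMITED, "wls_a2": LIMITED,
                "wls_b1": LIMITED, "wls_b2": LIMITED, "zfs": FULL},
}


def shared_paths(a, b):
    """Return the networks over which a and b are allowed to exchange traffic."""
    return sorted(
        name for name, members in NETWORKS.items()
        if a in members and b in members and FULL in (members[a], members[b])
    )


print(shared_paths("wls_a1", "zfs"))     # ['storage']
print(shared_paths("wls_a1", "wls_b1"))  # []
print(shared_paths("otd_a1", "otd_b1"))  # []
```

Even though every WebLogic vServer sits on the storage network, the limited membership means the only path it opens up is to the appliance.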

Now consider the switch setup for each of these networks and how the data is transmitted.

Ethernet over Infiniband Networks (EoIB connecting to a VLAN-tagged external network)

These networks can be created as described in the "tea break snippets"; here we will concentrate on what happens behind the scenes and use some of the Infiniband commands to investigate further. Taking the connection to Ethernet VLAN 100, we can investigate how its partition has been set up: first using the showvnics command to identify the external NICs that are in use, and then using the smpartition list active command to pick out the partition setup.

    [root@<gw name> ~]# showvnics | grep UP | grep 100
    ID  STATE    FLG IOA_GUID                NODE                        IID  MAC               VLN PKEY GW
    --- -------- --- ----------------------- --------------------------- ---- ----------------- --- ---- --------
    4   UP       N   A5960EE97D134323        <CN name> EL-C <cn IP>      0000 00:14:4F:F8:69:5D 100 800a 0A-ETH-1
    38  UP       N   4A282D7AEEF49768        <CN name> EL-C <cn IP>      0000 00:14:4F:FB:34:19 100 800a 0A-ETH-1
    48  UP       N   0C525ADA561F7179        <CN name> EL-C <cn IP>      0000 00:14:4F:FB:22:14 100 800a 0A-ETH-1
    49  UP       N   F9E4DC33D70A0DA2        <CN name> EL-C <cn IP>      0000 00:14:4F:F8:BE:1F 100 800a 0A-ETH-1
    30  UP       N   AE82EFAD4B2425C7        <CN name> EL-C <cn IP>      0000 00:14:4F:F8:55:58 100 800a 0A-ETH-1
    25  UP       N   73BDD3B88EE8CFE1        <CN name> EL-C <cn IP>      0000 00:14:4F:F9:FA:01 100 800a 0A-ETH-1
    50  UP       N   CC5301F73630E6EA        <CN name> EL-C <cn IP>      0000 00:14:4F:F9:5A:85 100 800a 0A-ETH-1
    28  UP       N   D2B7E3F14328A5F6        <CN name> EL-C <cn IP>      0000 00:14:4F:FA:66:7E 100 800a 0A-ETH-1
    29  UP       N   31E36AB07CFE81FB        <CN name> EL-C <cn IP>      0000 00:14:4F:FB:5B:45 100 800a 0A-ETH-1

Display of virtual Network Interface Cards on IB network
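The column layout above can be parsed to count the vNICs on a partition and to look up an IOA GUID by MAC address. This is a hypothetical Python helper, assuming the column order shown above and that only the NODE column contains spaces; it uses three of the rows above as sample input.

```python
from collections import namedtuple

VNic = namedtuple("VNic", "id state flg ioa_guid node iid mac vlan pkey gw")

# Three rows taken from the showvnics output above.
SAMPLE = """\
ID  STATE    FLG IOA_GUID         NODE                   IID  MAC               VLN PKEY GW
4   UP       N   A5960EE97D134323 <CN name> EL-C <cn IP> 0000 00:14:4F:F8:69:5D 100 800a 0A-ETH-1
38  UP       N   4A282D7AEEF49768 <CN name> EL-C <cn IP> 0000 00:14:4F:FB:34:19 100 800a 0A-ETH-1
48  UP       N   0C525ADA561F7179 <CN name> EL-C <cn IP> 0000 00:14:4F:FB:22:14 100 800a 0A-ETH-1
"""


def parse_showvnics(text):
    """Parse showvnics rows; NODE may contain spaces, so fix fields from both ends."""
    vnics = []
    for line in text.splitlines():
        fields = line.split()
        # Skip blank lines plus header/separator rows (first field not numeric).
        if len(fields) < 10 or not fields[0].isdigit():
            continue
        node = " ".join(fields[4:-5])
        vnics.append(VNic(*fields[:4], node, *fields[-5:]))
    return vnics


vnics = parse_showvnics(SAMPLE)
guid_by_mac = {v.mac: v.ioa_guid for v in vnics}
print(len(vnics), {v.pkey for v in vnics})  # 3 {'800a'}
print(guid_by_mac["00:14:4F:FB:22:14"])     # 0C525ADA561F7179
```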

This shows the virtual NICs configured on the switch for VLAN 100: in this example 9 vNICs are operational, and all of them use the partition identified by the hex number 800a. (My test environment has more vServers than are shown in the architecture diagrams above.) The IOA GUID is also visible on the vServer associated with each vNIC. Running the mlx4_vnic_info command on the vServer shows it, with the Ethernet NIC matched by its MAC address (HWaddr).

    [root@<My vServer> ~]# ifconfig eth166_1.100
    eth166_1.100 Link encap:Ethernet  HWaddr 00:14:4F:FB:22:14
              UP BROADCAST RUNNING SLAVE MULTICAST  MTU:1500  Metric:1
              RX packets:514101 errors:0 dropped:0 overruns:0 frame:0
              TX packets:52307 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:769667118 (734.0 MiB)  TX bytes:9041716 (8.6 MiB)

    [root@<My vServer> ~]# mlx4_vnic_info -i eth166_1.100 | grep -e IOA
    IOA_PORT      mlx4_0:1
    IOA_NAME      localhost HCA-1-P1
    IOA_LID       0x0040
    IOA_GUID      0c:52:5a:da:56:1f:71:79
    IOA_LOG_LINK  up
    IOA_PHY_LINK  active
    IOA_MTU       2048

Matching the GUID of a vNIC to the GUID in the vServer
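showvnics prints the IOA GUID as 16 upper-case hex digits, while mlx4_vnic_info prints it as colon-separated lower-case bytes, so both need normalising to one form before they can be compared. A small Python sketch; the helper name `normalize_guid` is hypothetical.

```python
import string


def normalize_guid(guid):
    """Reduce a 64-bit GUID to 16 lower-case hex digits (no '0x', no ':')."""
    g = guid.strip().lower()
    if g.startswith("0x"):
        g = g[2:]
    g = g.replace(":", "")
    if len(g) != 16 or any(c not in string.hexdigits for c in g):
        raise ValueError(f"not a 64-bit GUID: {guid!r}")
    return g


from_showvnics = "0C525ADA561F7179"         # IOA_GUID column on the gateway
from_vnic_info = "0c:52:5a:da:56:1f:71:79"  # IOA_GUID line on the vServer
print(normalize_guid(from_showvnics) == normalize_guid(from_vnic_info))  # True
```

The same helper also accepts the `0x`-prefixed form used by smpartition and ibstat.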

Now we can look at the partition itself.

    [root@<gateway 1> ~]# smpartition list active
    ... = 0x800a:
        0x0021280001a16917=both,
        0x0021280001a1809f=both,
        0x0021280001a180a0=both,
        0x0021280001a17f23=both,
        0x0021280001a17f24=both,
        0x0021280001a1788f=both,
        0x0021280001a1789b=both,
        0x0021280001a1716c=both,
        0x0021280001a1789c=both,
        0x0021280001a1716b=both,
        0x0021280001a17890=both,
        0x0021280001a17d3c=both,
        0x0021280001a17d3b=both,
        0x0021280001a17717=both,
        0x0021280001a16918=both,
        0x0021280001a17718=both,
        0x002128c00b7ac042=full,
        0x002128c00b7ac041=full,
        0x002128bea5fac002=full,
        0x002128bea5fac001=full,
        0x002128bea5fac041=full,
        0x002128bea5fac042=full,
        0x002128c00b7ac001=full,
        0x002128c00b7ac002=full;
    ...

Partition Membership for partition 800a

The partition includes 16 port GUIDs with a membership of "both" and a further 8 port GUIDs that are full members. (A membership of "both" is an Oracle value-add to Infiniband that allows vServers to be given either full or limited membership of the partition.)
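The membership counts can be checked mechanically. This Python sketch assumes the `0x<GUID>=<membership>` entry format shown in the smpartition output above; it is not an official parser for that tool.

```python
import re
from collections import Counter

# Entries for partition 0x800a, copied from the smpartition output above.
PARTITION_800A = """
0x0021280001a16917=both, 0x0021280001a1809f=both, 0x0021280001a180a0=both,
0x0021280001a17f23=both, 0x0021280001a17f24=both, 0x0021280001a1788f=both,
0x0021280001a1789b=both, 0x0021280001a1716c=both, 0x0021280001a1789c=both,
0x0021280001a1716b=both, 0x0021280001a17890=both, 0x0021280001a17d3c=both,
0x0021280001a17d3b=both, 0x0021280001a17717=both, 0x0021280001a16918=both,
0x0021280001a17718=both, 0x002128c00b7ac042=full, 0x002128c00b7ac041=full,
0x002128bea5fac002=full, 0x002128bea5fac001=full, 0x002128bea5fac041=full,
0x002128bea5fac042=full, 0x002128c00b7ac001=full, 0x002128c00b7ac002=full;
"""

# Assumed entry shape: 0x<16 hex digits>=<membership keyword>.
ENTRY = re.compile(r"0x([0-9a-fA-F]{16})=(both|full|limited)")


def parse_members(text):
    """Map each port GUID (lower-case hex) to its membership type."""
    return {guid.lower(): kind for guid, kind in ENTRY.findall(text)}


members = parse_members(PARTITION_800A)
counts = Counter(members.values())
print(counts["both"], counts["full"])  # 16 8
```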

From this output I can surmise that we are dealing with a quarter rack: there are 16 entries with "both" membership, and because each physical compute node is dual ported it contributes two entries to the partition, giving 16 / 2 = 8 compute nodes. We can match a GUID to its physical channel adapter (CA) by running the ibstat command on the compute node.

    [root@el2dcn07 ~]# ibstat
    CA 'mlx4_0'
            CA type: MT26428
            Number of ports: 2
            Firmware version: 2.9.1000
            Hardware version: b0
            Node GUID: 0x0021280001a17d3a
            System image GUID: 0x0021280001a17d3d
            Port 1:
                    State: Active
                    Physical state: LinkUp
                    Rate: 40
                    Base lid: 64
                    LMC: 0
                    SM lid: 57
                    Capability mask: 0x02510868
                    Port GUID: 0x0021280001a17d3b
                    Link layer: IB
            Port 2:
                    State: Active
                    Physical state: LinkUp
                    Rate: 40
                    Base lid: 65
                    LMC: 0
                    SM lid: 57
                    Capability mask: 0x02510868
                    Port GUID: 0x0021280001a17d3c
                    Link layer: IB

Infiniband port information on a compute node
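The ibstat output also hints at how the 16 "both" entries map onto compute nodes: the node GUID ends in ...7d3a and its two ports in ...7d3b and ...7d3c. The Python sketch below assumes, based only on this one example, that the two ports of an HCA always have consecutive GUIDs; under that assumption, pairing the partition's "both" GUIDs gives the number of dual-ported HCAs. `IBSTAT` is a trimmed excerpt of the output above.

```python
import re

# Trimmed excerpt of the ibstat output above (other fields removed).
IBSTAT = """CA 'mlx4_0'
        Node GUID: 0x0021280001a17d3a
        Port 1:
                Port GUID: 0x0021280001a17d3b
        Port 2:
                Port GUID: 0x0021280001a17d3c
"""

# The 16 "both" members of partition 0x800a from the smpartition output above.
BOTH_MEMBERS = [
    0x0021280001a16917, 0x0021280001a1809f, 0x0021280001a180a0, 0x0021280001a17f23,
    0x0021280001a17f24, 0x0021280001a1788f, 0x0021280001a1789b, 0x0021280001a1716c,
    0x0021280001a1789c, 0x0021280001a1716b, 0x0021280001a17890, 0x0021280001a17d3c,
    0x0021280001a17d3b, 0x0021280001a17717, 0x0021280001a16918, 0x0021280001a17718,
]


def port_guids(ibstat_text):
    """Extract the 'Port GUID' values from ibstat output as integers."""
    return [int(g, 16) for g in re.findall(r"Port GUID: (0x[0-9a-fA-F]+)", ibstat_text)]


def pair_ports(guids):
    """Group port GUIDs into HCAs, ASSUMING both ports of an HCA have consecutive GUIDs."""
    ordered, pairs, i = sorted(guids), [], 0
    while i < len(ordered):
        if i + 1 < len(ordered) and ordered[i + 1] - ordered[i] == 1:
            pairs.append((ordered[i], ordered[i + 1]))
            i += 2
        else:
            pairs.append((ordered[i],))  # a port with no consecutive partner
            i += 1
    return pairs


print(all(p in BOTH_MEMBERS for p in port_guids(IBSTAT)))  # True
print(len(pair_ports(BOTH_MEMBERS)))                        # 8
```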

That leaves the 8 full-member entries, which relate to the Ethernet bridge technology of the Infiniband gateway switches. Each gateway switch has two physical ports that provide Ethernet connectivity to the external datacentre, and each of these ports is presented as a dual-ported channel adapter. Each gateway switch therefore contributes four port GUIDs, or 8 for the pair of gateway switches.
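The arithmetic for the gateway entries can be checked against the partition listing. This Python sketch assumes, purely for illustration, that the port GUIDs presented by one gateway switch share everything except their last byte; that holds for the eight full-member GUIDs above but is not a documented property.

```python
from collections import defaultdict

# The 8 full members of partition 0x800a from the smpartition output above.
FULL_MEMBERS = [
    0x002128c00b7ac042, 0x002128c00b7ac041, 0x002128bea5fac002, 0x002128bea5fac001,
    0x002128bea5fac041, 0x002128bea5fac042, 0x002128c00b7ac001, 0x002128c00b7ac002,
]

# From the text: 2 gateways x 2 Ethernet ports x 2 GUIDs per dual-ported bridge CA.
GATEWAYS, ETH_PORTS_PER_GATEWAY, GUIDS_PER_ETH_PORT = 2, 2, 2
expected = GATEWAYS * ETH_PORTS_PER_GATEWAY * GUIDS_PER_ETH_PORT
print(expected == len(FULL_MEMBERS))  # True

# ASSUMPTION: GUIDs from one gateway switch differ only in their last byte.
by_switch = defaultdict(list)
for guid in FULL_MEMBERS:
    by_switch[guid >> 8].append(guid)
for prefix, guids in sorted(by_switch.items()):
    print(f"{prefix:014x}: {len(guids)} GUIDs")
```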

In a virtual Exalogic, partitions are enforced at the end points, in the ports of the host channel adapters. The partition plays no part in routing traffic through the fabric: routing is managed by the subnet manager using Local Identifiers (LIDs), so traffic belonging to a particular partition can be routed anywhere in the fabric. Infiniband does allow each switch to maintain its own partition table, in which case the switch inspects the pKey in each packet header and lets through only packets that match an entry in that table, but this is not done for a virtual Exalogic.

Each HCA has two ports, and each port maintains its own partition table, which the subnet manager updates with all the partition keys accessible to that HCA. When a packet arrives, the pKey in its header is matched against the local partition table, and if no match is found the packet is dropped. In our example, therefore, the partition that carries traffic from the external world is effectively allowed to reach every compute node. This is necessary because a vServer may migrate from one physical compute node to another and must still be able to communicate with the external world. But how does this help with security and multi-tenancy if traffic can flow to every node?

The answer is that isolation is enforced at a different level. Each vServer is allocated a virtual function within the HCA. The diagram below shows how the physical HCA can create multiple virtual functions (up to 63), which are then allocated to individual virtual machines. This is also the mechanism behind Single Root I/O Virtualisation (SR-IOV), which provides optimal performance combined with flexibility.

From the fabric's perspective, partitioning is always set up at the physical level. There is one physical partition table per HCA port, and the Subnet Manager updates this table with the pKeys of the partitions accessible to that HCA. When a packet arrives, the pKey in its header is matched against the partition table of the physical port receiving it, and the packet is dropped if no match is found. In a virtual Exalogic all the compute nodes are in the partition, so traffic is never rejected at this level unless it has been directed at the storage or at another IB-connected device such as an Exadata.
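The physical-level check can be modelled in a few lines. This Python sketch is a simplified model, not HCA firmware: the fabric delivers a packet by destination LID without looking at its pKey, and the receiving port accepts it only if its partition table holds a matching entry. Matching follows the Infiniband rule that the low 15 bits identify the partition and bit 15 marks full membership, with two limited members never matching. The port's table contents are hypothetical.

```python
FULL_BIT = 0x8000   # bit 15: 1 = full member, 0 = limited member
BASE_MASK = 0x7FFF  # low 15 bits identify the partition


def pkeys_match(a, b):
    """Same partition (low 15 bits) and at least one side is a full member."""
    return (a & BASE_MASK) == (b & BASE_MASK) and bool((a | b) & FULL_BIT)


class HcaPort:
    def __init__(self, lid, pkey_table):
        self.lid = lid
        self.pkey_table = list(pkey_table)  # populated by the Subnet Manager

    def accept(self, packet_pkey):
        """Drop the packet unless some entry in the port's table matches its pKey."""
        return any(pkeys_match(packet_pkey, entry) for entry in self.pkey_table)


def deliver(ports_by_lid, dest_lid, packet_pkey):
    """Route on the destination LID only; the pKey is checked at the end point."""
    return ports_by_lid[dest_lid].accept(packet_pkey)


# Hypothetical fabric: a compute-node port (LID 64, as in the ibstat output)
# whose table holds 0x800a and a made-up second partition 0x800b.
fabric = {64: HcaPort(64, [0x800A, 0x800B])}
print(deliver(fabric, 64, 0x800A))  # True: pKey is in the port's table
print(deliver(fabric, 64, 0x800C))  # False: no matching entry, packet dropped
```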

So what about isolation for virtual machines? Each virtual function (PCIe function) has its own virtual partition table. This table is neither visible to nor programmed by the Subnet Manager; it is programmed by the Dom0 driver. Each entry in the virtual partition table is an index into the physical partition table, identifying the entries that the virtual function may use. In effect, it maps partitions in the Infiniband network to the specific vServers running on the compute node.
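The relationship between the physical partition table and each virtual function's table can be sketched as follows. This is a hypothetical Python model of what the Dom0 driver maintains, not the driver's real data structures; the pKey values other than 0x800a are made up, and the 63 limit is the figure quoted above.

```python
MAX_VFS = 63  # per-HCA virtual function limit quoted in the text


class PhysicalPort:
    def __init__(self, pkey_table):
        self.pkey_table = list(pkey_table)  # written by the Subnet Manager


class VirtualFunction:
    def __init__(self, port, indices):
        self.port = port
        # Virtual partition table: indices into the physical table, written by Dom0.
        self.virtual_table = list(indices)

    def pkeys(self):
        """Resolve the virtual table to the pKeys this VF may use."""
        return [self.port.pkey_table[i] for i in self.virtual_table]


class Hca:
    def __init__(self, port):
        self.port = port
        self.vfs = []

    def create_vf(self, allowed_pkeys):
        """Allocate a VF for a vServer, mapping its allowed pKeys to table indices."""
        if len(self.vfs) >= MAX_VFS:
            raise RuntimeError("no free virtual functions on this HCA")
        # list.index raises ValueError if a pKey is not in the physical table.
        indices = [self.port.pkey_table.index(pk) for pk in allowed_pkeys]
        vf = VirtualFunction(self.port, indices)
        self.vfs.append(vf)
        return vf


# Hypothetical physical table; only 0x800a comes from the example above.
hca = Hca(PhysicalPort([0x800A, 0x800B, 0x8005]))
vserver_a = hca.create_vf([0x800A, 0x8005])
vserver_b = hca.create_vf([0x800B])
print(vserver_a.virtual_table)              # [0, 2]
print([hex(p) for p in vserver_b.pkeys()])  # ['0x800b']
```

Storing indices rather than copies of the pKeys keeps the physical table as the single source of truth, which is why the Subnet Manager never needs to see the per-VF tables.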

Enforcement is achieved using the Infiniband construct of a queue pair (QP). A queue pair consists of a send queue and a receive queue, which software uses to communicate between hardware nodes. Each HCA can support millions of QPs, and each QP belongs to a PCIe function (either a physical function or a virtual function); the Dom0 driver performs this assignment when the QP is created. Each QP can belong to only one partition, so when Dom0 creates a queue pair for a vServer on a specific partition, it uses the virtual partition table of that vServer's VF to check that the QP may be created. When the QP receives a packet, it checks that the pKey in the packet header matches the pKey assigned to the QP.

To put it another way, when a virtual machine is started on a compute node, the guest VM's configuration works with the hypervisor to ensure that the guest's QPs can only be created on partitions it is allowed to communicate over. The allowed partitions are defined in the vServer's vm.cfg file.
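A hypothetical Python sketch of the two QP checks described above: Dom0 refuses to create a QP on a partition that the VF's table does not allow, and a QP accepts only packets carrying its own pKey. The receive check is simplified to exact equality, and the class and function names are illustrative only.

```python
class QueuePairError(Exception):
    pass


class VirtualFunction:
    def __init__(self, name, allowed_pkeys):
        self.name = name
        # pKeys resolved from this VF's virtual partition table.
        self.allowed_pkeys = frozenset(allowed_pkeys)


class QueuePair:
    def __init__(self, vf, pkey):
        self.vf = vf
        self.pkey = pkey  # a QP belongs to exactly one partition

    def receive(self, packet_pkey):
        """Simplified check: accept only packets carrying this QP's pKey."""
        return packet_pkey == self.pkey


def create_qp(vf, pkey):
    """Dom0-side check: refuse a QP on a partition the VF is not allowed to use."""
    if pkey not in vf.allowed_pkeys:
        raise QueuePairError(f"{vf.name} may not use pKey {pkey:#06x}")
    return QueuePair(vf, pkey)


vf_a = VirtualFunction("vserver_a", [0x800A])
qp = create_qp(vf_a, 0x800A)
print(qp.receive(0x800A), qp.receive(0x800B))  # True False
try:
    create_qp(vf_a, 0x800B)
except QueuePairError as err:
    print(err)  # vserver_a may not use pKey 0x800b
```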

Conclusion

By using Infiniband partitions and the Exalogic "secret sauce" in the virtualised Exalogic, we set up secure communication paths. The IB fabric and HCAs ensure that traffic on a particular partition can only reach the Exalogic compute nodes in the environment, and the hypervisor and HCA then work together to ensure that each vServer has access only to the partitions that have been administratively allocated to it. In this way it is possible to maintain complete network isolation between vServers while making use of a shared physical infrastructure.